Section: New Results

Semi-supervised Understanding of Complex Activities in Large-scale Datasets

Participants: Carlos F. Crispim-Junior, Michal Koperski, Serhan Cosar, François Brémond.

keywords: Semi-supervised methods, activity understanding, probabilistic models, pairwise graphs

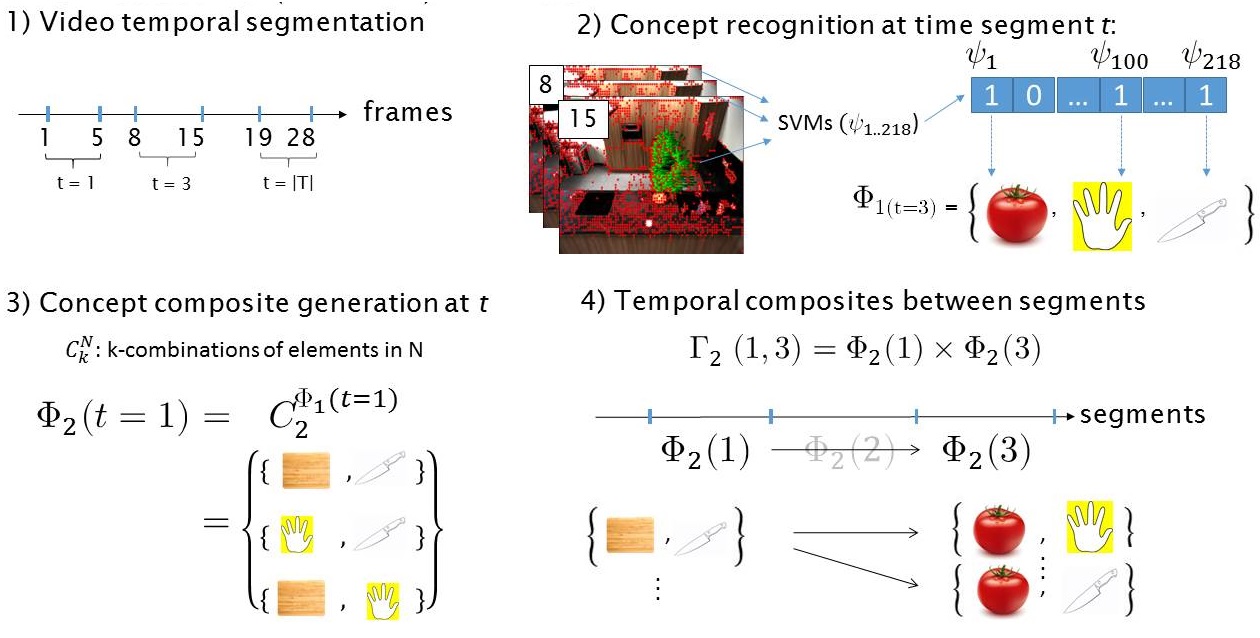

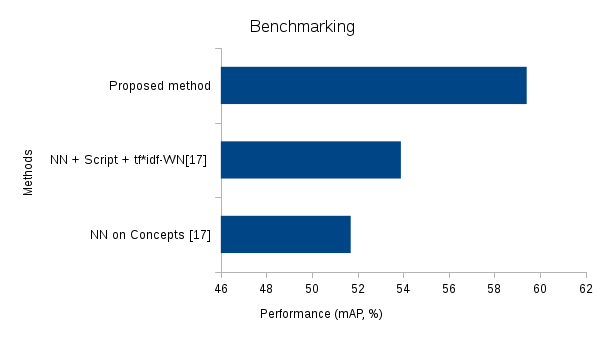

Methods for action recognition have evolved considerably over the past years and can now automatically learn and recognize short-term actions with satisfactory accuracy. Nonetheless, the recognition of complex activities (compositions of actions and scene objects) is still an open problem because of the complex temporal and composite structure of this category of events. Existing methods either focus on simple activities or oversimplify the modeling of complex activities by targeting only whole-part relations between their sub-parts (e.g., actions). We study a semi-supervised approach (Fig. 13) that learns complex activities from the temporal patterns of concept compositions of different arities (e.g., “slicing-tomato” before “pouring_into-pan”). So far, our semi-supervised, probabilistic model, which uses pairwise relations along both the compositional and temporal axes, outperforms prior work by 6 percentage points (59% against 53% mean average precision, Fig. 14). Our method also stands out from competing approaches in its ability to learn relations in a setting with a large number of video sequences (e.g., 256) and distinct concept classes (Cooking Composite dataset, 218 classes, [90]), an ability that current state-of-the-art methods lack. Our initial achievements in this line of research have been published in [31]. Further work will focus on learning relations of higher arity.
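To make the notion of a pairwise temporal relation concrete, the sketch below counts "before" relations between concept labels and uses them to score a new sequence. It is only an illustrative toy, not the model published in [31]: it is fully supervised, it uses a simple smoothed frequency estimate, and the function names (`before_pairs`, `fit`, `score`, `classify`), the activity names, and every concept label other than “slicing-tomato” and “pouring_into-pan” are assumptions introduced for the example.

```python
import math
from collections import Counter
from itertools import combinations


def before_pairs(sequence):
    """Return the set of ordered pairs (a, b) where concept a occurs before concept b."""
    pairs = set()
    for i, j in combinations(range(len(sequence)), 2):
        if sequence[i] != sequence[j]:
            pairs.add((sequence[i], sequence[j]))
    return pairs


def fit(labelled_videos):
    """Count, per activity, how many training videos contain each 'before' pair."""
    pair_counts = {}
    video_counts = Counter()
    for activity, sequence in labelled_videos:
        video_counts[activity] += 1
        pair_counts.setdefault(activity, Counter()).update(before_pairs(sequence))
    return pair_counts, video_counts


def score(sequence, activity, pair_counts, video_counts, alpha=1.0):
    """Sum of smoothed log-probabilities of the observed pairs under one activity."""
    n = video_counts[activity]
    counts = pair_counts[activity]
    total = 0.0
    for pair in before_pairs(sequence):
        # Laplace-smoothed estimate of P(pair is present | activity).
        total += math.log((counts[pair] + alpha) / (n + 2 * alpha))
    return total


def classify(sequence, pair_counts, video_counts):
    """Pick the activity whose pairwise relations best explain the sequence."""
    return max(video_counts, key=lambda a: score(sequence, a, pair_counts, video_counts))


# Hypothetical toy training set: (activity label, ordered concept sequence).
train = [
    ("tomato_sauce", ["slicing-tomato", "pouring_into-pan", "stirring-pan"]),
    ("tomato_sauce", ["slicing-tomato", "pouring_into-pan"]),
    ("salad", ["washing-lettuce", "slicing-tomato", "mixing-bowl"]),
]
pair_counts, video_counts = fit(train)
predicted = classify(["slicing-tomato", "pouring_into-pan"], pair_counts, video_counts)
print(predicted)
```

In this toy setting the order of the concepts matters: the sequence with “slicing-tomato” before “pouring_into-pan” scores higher under the hypothetical `tomato_sauce` activity than the reversed sequence, which is the kind of temporal pattern the paragraph above refers to. Relations of higher arity would replace the pairs with longer ordered tuples.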